The Reproducible Builds project relies on several projects, supporters and sponsors for financial support, but they are also valued as ambassadors who spread the word about our project and the work that we do.

This is the fourth instalment in a series featuring the projects, companies and individuals who support the Reproducible Builds project.

We started this series by featuring the Civil Infrastructure Platform project and followed this up with a post about the Ford Foundation as well as recent ones about ARDC and the Google Open Source Security Team (GOSST). Today, however, we will be talking with Jan Nieuwenhuizen about Bootstrappable Builds, GNU Mes and GNU Guix.

Chris Lamb: Hi Jan, thanks for taking the time to talk with us today. First, could you briefly tell me about yourself?

Jan: Thanks for the chat; it's been a while! Well, I've always been trying to find something new and interesting that is just asking to be created but is mostly being overlooked. That's how I came to work on GNU Guix and create GNU Mes to address the bootstrapping problem that we have in free software. It's also why I have been working on releasing Dezyne, a programming language and set of tools to specify and formally verify concurrent software systems as free software.

Briefly summarised, compilers are often written in the language they are compiling. This creates a chicken-and-egg problem which leads users and distributors to rely on opaque, pre-built binaries of those compilers that they use to build newer versions of the compiler. To gain trust in our computing platforms, we need to be able to tell how each part was produced from source, and opaque binaries are a threat to user security and user freedom since they are not auditable. The goal of bootstrappability (and the bootstrappable.org project in particular) is to minimise the amount of these bootstrap binaries.

Anyway, after studying Physics at Eindhoven University of Technology (TU/e), I worked for digicash.com, a startup trying to create a digital and anonymous payment system. Sadly, however, a traditional account-based system won. Separate to this, as there was no software (either free or proprietary) to automatically create beautiful music notation, together with Han-Wen Nienhuys, I created GNU LilyPond. Ten years ago, I took the initiative to co-found a democratic school in Eindhoven based on the principles of sociocracy. And last Christmas I finally went vegan, after being mostly vegetarian for about 20 years!

Chris: For those who have not heard of it before, what is GNU Guix? What are the key differences between Guix and other Linux distributions?

Jan: GNU Guix is both a package manager and a full-fledged GNU/Linux distribution. In both forms, it provides state-of-the-art package management features such as transactional upgrades and package roll-backs, hermetically sealed build environments, unprivileged package management as well as per-user profiles. One obvious difference is that Guix forgoes the usual Filesystem Hierarchy Standard (i.e. /usr, /lib, etc.), but there are other significant differences too, such as Guix being scriptable using Guile/Scheme, as well as Guix's dedication and focus on free software.

Chris: How does GNU Guix relate to GNU Mes? Or, rather, what problem is Mes attempting to solve?

Jan: GNU Mes was created to address the security concerns that arise from bootstrapping an operating system such as Guix. Even if this process entirely involves free software (i.e. the source code is, at least, available), this commonly uses large and unauditable binary blobs.

Mes is a Scheme interpreter written in a simple subset of C and a C compiler written in Scheme, and it comes with a small, bootstrappable C library. Twice, the Mes bootstrap has halved the size of opaque binaries that were needed to bootstrap GNU Guix. These reductions were achieved by first replacing GNU Binutils, GNU GCC and the GNU C Library with Mes, and then replacing Unix utilities such as awk, bash, coreutils, grep, sed, etc., by Gash and Gash-Utils. The final goal of Mes is to help create a full-source bootstrap for any interested UNIX-like operating system.

Chris: What is the current status of Mes?

Jan: Mes supports all that is needed from R5RS and GNU Guile to run MesCC with Nyacc, the C parser written for Guile, for 32-bit x86 and ARM. The next step for Mes would be to become more compatible with Guile, e.g., to have guile-module support and support running Gash and Gash-Utils.

In working to create a full-source bootstrap, I have disregarded the kernel and Guix build system for now, but otherwise, all packages should be built from source, and obviously, no binary blobs should go in. We still need a Guile binary to execute some scripts, and it will take at least another one to two years to remove that binary. I'm using the 80/20 approach, cutting corners initially to get something working and useful early.

Another metric would be how many architectures we have. We are quite a way with ARM, tinycc now works, but there are still problems with GCC and Glibc. RISC-V is coming, too, which could be another metric. Someone has looked into picking up NixOS this summer. How many distros do anything about reproducibility or bootstrappability? The bootstrappability community is so small that we don't need metrics, sadly. The number of bytes of binary seed is a nice metric, but running the whole thing on a full-fledged Linux system is tough to put into a metric. Also, it is worth noting that I'm developing on a modern Intel machine (i.e. a platform with a management engine); that's another key component that doesn't have metrics.

Chris: From your perspective as a Mes/Guix user and developer, what does reproducibility mean to you? Are there any related projects?

Jan: From my perspective, I'm more into the problem of bootstrapping, and reproducibility is a prerequisite for bootstrappability. Reproducibility clearly takes a lot of effort to achieve, however. It's relatively easy to install some Linux distribution and be happy, but if you look at communities that really care about security, they are investing in reproducibility and other ways of improving the security of their supply chain. Projects I believe are complementary to Guix and Mes include NixOS, Debian and, on the hardware side, the RISC-V platform, which shares many of our core principles and goals.

Chris: Well, what are these principles and goals?

Jan: Reproducibility and bootstrappability often feel like the next step in the frontier of free software. If you have all the sources and you can't reproduce a binary, that just doesn't feel right anymore. We should start to desire (and demand) transparent, elegant and auditable software stacks. To a certain extent, that's always been a low-level intent since the beginning of free software, but something clearly got lost along the way.

On the other hand, if you look at the NPM or Rust ecosystems, we see a world where people directly install binaries. As they are not as supportive of copyleft as the rest of the free software community, you can see that movement and people in our area are doing more as a response to that, so that what we have continues to remain free, and to prevent us from falling asleep and waking up in a couple of years to see, for example, Rust in the Linux kernel and (more importantly) that we require big binary blobs to use our systems. It's an excellent time to advance right now, so we should get a foothold in and ensure we don't lose any more.

Chris: What would be your ultimate reproducibility goal? And what would the key steps or milestones be to reach that?

Jan: The ultimate goal would be to have a system built with open hardware, with all software on it fully bootstrapped from its source. This bootstrap path should be easy to replicate and straightforward to inspect and audit. All fully reproducible, of course! In essence, we would have solved the supply chain security problem.

Our biggest challenge is ignorance. There is much unawareness about the importance of what we are doing. As it is rather technical and doesn't really affect everyday computer use, that is not surprising. This unawareness can be a great force driving us in the opposite direction. Think of Rust being allowed in the Linux kernel, or Python being required to build a recent GNU C library (glibc). Also, there is the fact that companies like Google/Apple still want to play "us vs. them", not willing to support GPL software. Not ready yet to truly support user freedom.

Take the infamous log4j bug: everyone is using "open source" these days, but nobody wants to take responsibility and help develop or nurture the community. Not "ecosystem", as that's how it's being approached right now: live and let live/die: see what happens without taking any responsibility. We are growing and we are strong and we can do a lot, but if we have to work against those powers, it can become problematic. So, let's spread our great message and get more people involved!

Chris: What has been your biggest win?

Jan: From a technical point of view, the full-source bootstrap has been our biggest win. A talk by Carl Dong at the 2019 Breaking Bitcoin conference stated that connecting Jeremiah Orians' Stage0 project to Mes would be the "holy grail" of bootstrapping, and we recently managed to achieve just that: in other words, starting from hex0, a 357-byte binary, we can now build the entire Guix system.

This past year we have not made significant visible progress, however, as our funding was unfortunately not there. The Stage0 project has advanced in RISC-V. A month ago, though, I secured NLnet funding for another year, and thanks to NLnet, Ekaitz Zarraga and Timothy Sample will work on GNU Mes and the Guix bootstrap as well. Separate to this, the bootstrappable community has grown a lot from the two people it was six years ago: there are now over 100 people in the #bootstrappable IRC channel, for example. The enlarged community is possibly an even more important win going forward.

Chris: How realistic is a 100% bootstrappable toolchain? And from someone who has been working in this area for a while, is solving Trusting Trust actually feasible in reality?

Jan: Two answers: Yes and no, it really depends on your definition. One great thing is that the whole Stage0 project can also run on the Knight virtual machine, a hardware platform that was designed, I think, in the 1970s. I believe we can and must do better than we are doing today, and that there's a lot of value in it.

The core issue is not the trust; we can probably all trust each other. On the other hand, we don't want to trust each other or even ourselves. I am not, personally, going to inspect my RISC-V laptop, and other people create the hardware and probably do not want to inspect the software. The answer comes back to being conscientious and doing what is right. Inserting GCC as a binary blob is not right. I think we can do better, and that's what I'd like to do. The security angle is interesting, but I don't like the paranoid part of that; I like the beauty of what we are creating together and stepwise improving on that.

Chris: Thanks for taking the time to talk to us today. If someone wanted to get in touch or learn more about GNU Guix or Mes, where might someone go?

Jan: Sure! First, check out:

I'm also on Twitter (@janneke_gnu) and on octodon.social (@janneke@octodon.social).

Chris: Thanks for taking the time to talk to us today.

Jan: No problem. :)

For more information about the Reproducible Builds project, please see our website at reproducible-builds.org. If you are interested in ensuring the ongoing security of the software that underpins our civilisation and wish to sponsor the Reproducible Builds project, please reach out to the project by emailing contact@reproducible-builds.org.

In Mexico, we have the great luck to live among vestiges of long-gone cultures, some that were conquered and in some way got adapted and survived into our modern, mostly-West-European-derived society, and some that thrived but disappeared many more centuries ago. And although not everybody feels the same way, in my family we have always enjoyed visiting archaeological sites, both when I was a child and today.

Some of the regulars that follow this blog (or its syndicators) will remember Xochicalco, as it was the destination we chose for the daytrip back in the day, in DebConf6 (May 2006).

This year had a lot of things happen in it, world-wide, as is always the case.

Being more selfish, here are the things I remember, in brief unless there are comments/questions:

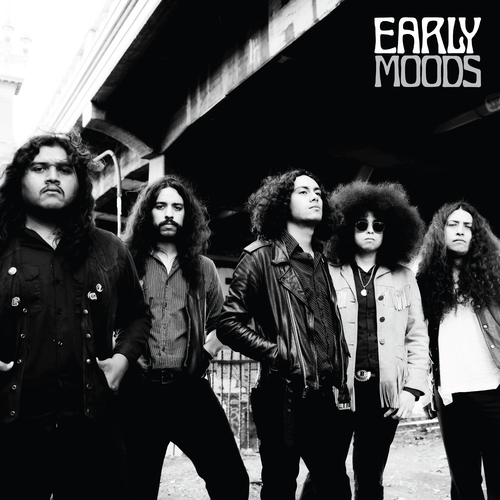

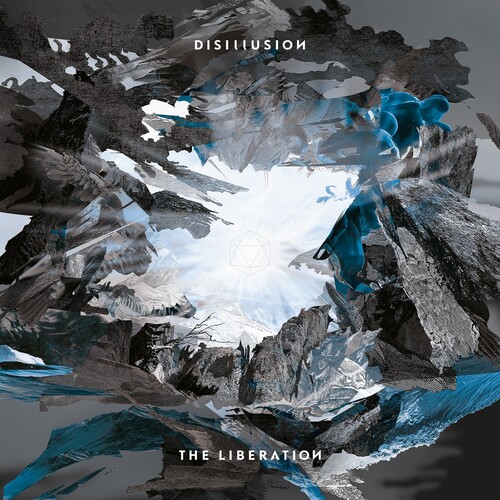

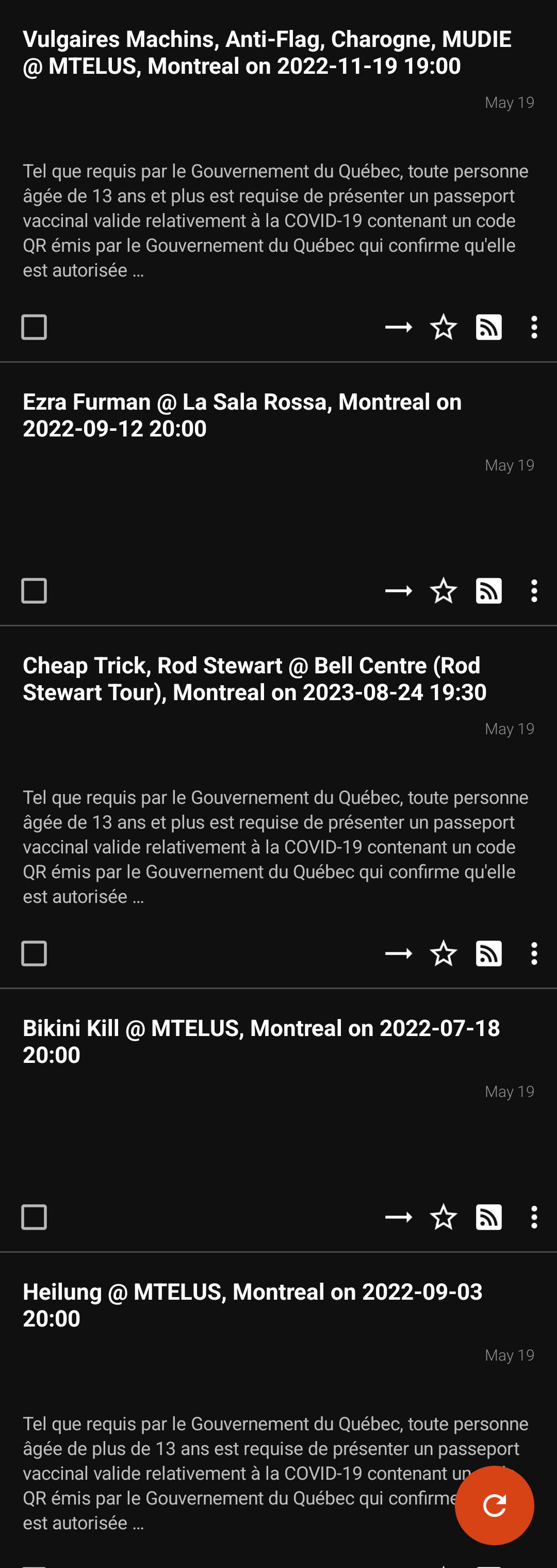

With the end of the year approaching fast, I thought putting my year in retrospective via music would be a fun thing to do.

Albums

In 2022, I added 51 new albums to my collection, nearly one a week! I listed them below in the order in which I acquired them.

I purchased most of these albums when I could and borrowed the rest at libraries. If you want to browse through, I added links to the album covers pointing either to websites where you can buy them or to Discogs when digital copies weren't available.

I will probably have to get a new motherboard for my desktop in a year or two, as quite a few motherboards now also have WiFi (WiFi 6?), I think on the southbridge. I will at least have a look in the new year and know more about what's been happening. For at least the last 2-3 years there has been a rumor, confirmed time and again, that the Tata Group has been in talks with multiple vendors to set up a chip fabrication and testing business, but to date they haven't been able to find one. They do keep on giving press conferences about the

QNAP's original internal USB drive, DOM

a 9pin to USB A adapter

9pin adapter to USB-A connected with some more cable

Mounted SSD in its external case

The current metalfinder version (
